Belen Moncalvillo

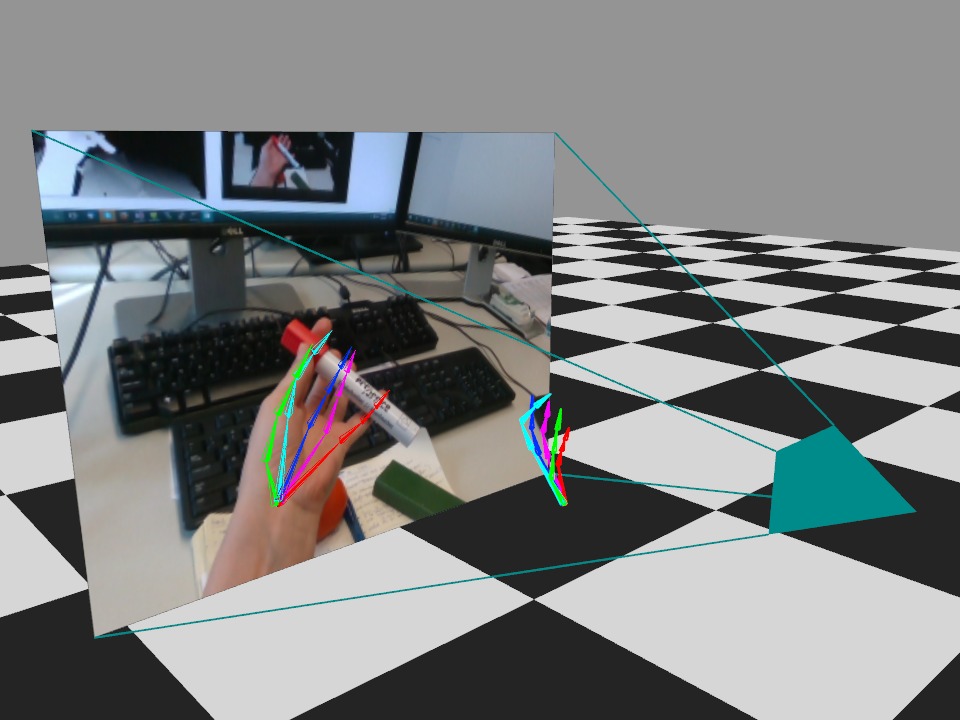

Getting a computer to recognize the movements and position of the hands has long been a major computing challenge, one that until now required specialized cameras or devices recording from several angles. The URJC recently took part in a research project led by the Max Planck Institute for Informatics in Germany. The project developed a method that identifies the position of every finger and joint of a hand in real time from ordinary videos. "This advance opens the door to all kinds of gesture-based remote control, to the study of hand movements and to interaction with virtual worlds," says Dan Casas, a Marie Curie researcher at the URJC's Multimodal Simulation Laboratory.

This technology was presented last June at the prestigious Conference on Computer Vision and Pattern Recognition (CVPR) in Utah (USA). It will make it possible, for example, to turn up the radio volume or change the station accurately without touching anything. The algorithm will also help improve animation and control in video games and virtual reality environments, areas in which the URJC is a pioneer.

Hand recognition could also be used in other fields, such as medicine. There it could improve the control of remote surgical devices or help detect health problems such as stress or depression from patients' gestures. The tool also opens up new possibilities for the digital interpretation of sign language.

Teaching a computer to see in depth

Digitally capturing the position of the hands is particularly difficult for three reasons. Each hand has a large number of joints, we move our hands quickly, and, above all, depth has to be estimated from a two-dimensional image. "In a video in which a hand appears to move naturally, in most frames some of the fingers are hidden by other fingers," explains Casas. To solve this problem, the researchers used a machine learning algorithm capable of "learning" by observing many examples. As Casas explains: "It is a behavior similar to that of humans themselves. Faced with a new task, such as walking, we are able to imitate it by observing and trying many times." The algorithm was trained on a large number of frames in which the position of each hand joint had been annotated. The team even created a second algorithm that generated realistic images of hands for this purpose.

With this study, for the first time in the fields of computer vision and artificial intelligence, it is possible to detect in real time where all the fingers of a moving hand are. The input can be material as accessible as a video recorded with a mobile phone or webcam, or one taken from YouTube.